|

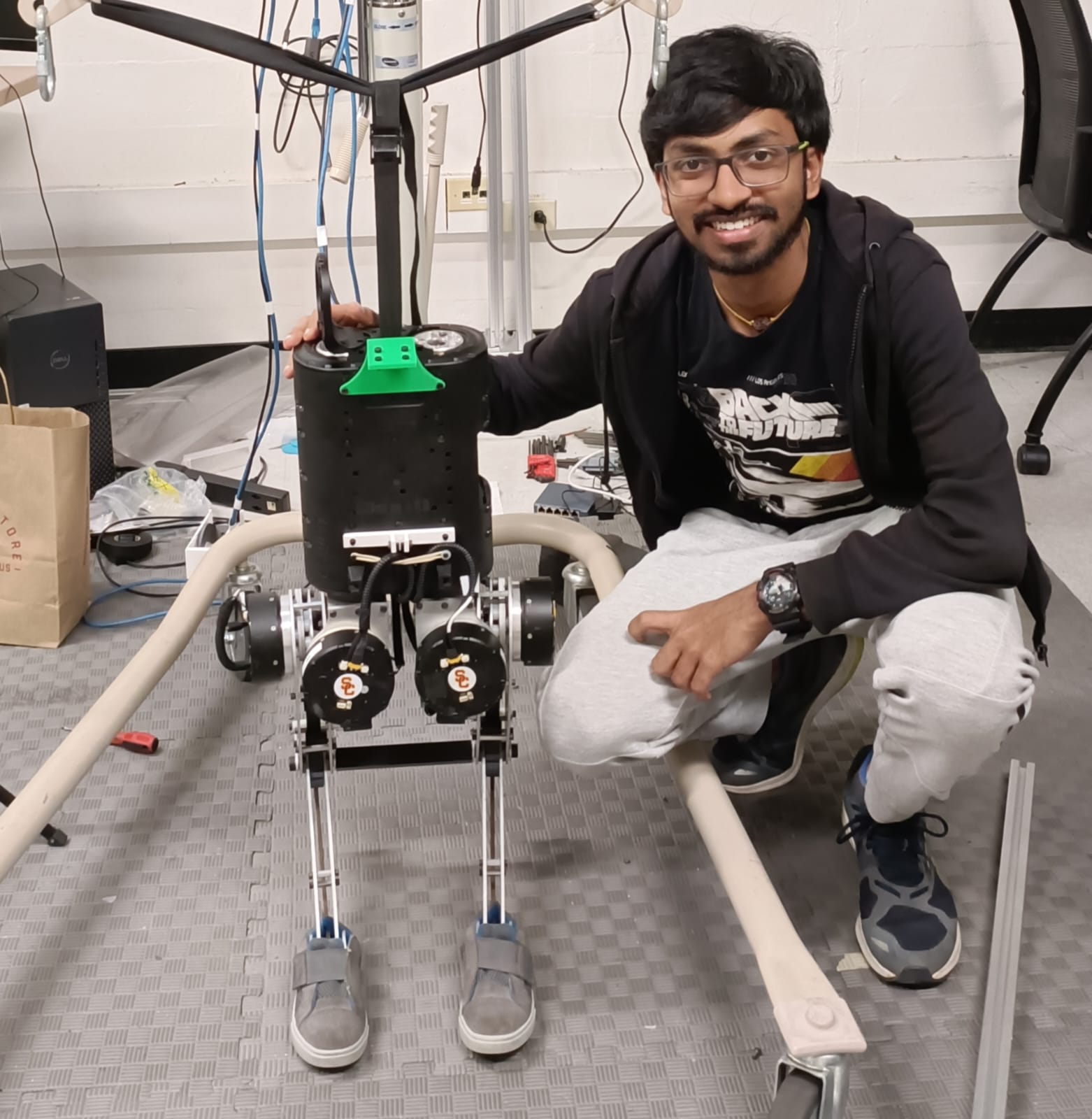

Nikhil Sobanbabu

I'm a Master's student in the Electrical and Computer Engineering department at Carnegie Mellon University. I work with Prof. Guanya Shi at the LeCAR Lab on learning and control of legged robots, with a current focus on improving the transferability of simulation-trained policies and enabling reliable adaptation during deployment. I also collaborate with the Search-Based Planning Lab on multi-agent quadrupeds, and I work with the Dynamic Robotics and Control Laboratory under the guidance of Prof. Quan Nguyen on prior-guided reinforcement learning for agile legged robot control.

Previously, I received my Bachelor's in Electrical Engineering from IIT Madras. During that time, I interned as a Robotics Intern at DiFACTO Robotics and Automation, focusing on navigation and recovery of an in-house AMR. I also worked as an Undergraduate Research Assistant at the Control Engineering Laboratory with Dr. Bharath Bhikkaji on optimal strategies for 1vN pursuit-evasion games and multi-agent quadrotor tracking. Apart from this, I was part of Team Anveshak, a student-run Mars rover team of IIT Madras, which participates in the University Rover Challenge at the Mars Desert Research Station, Utah. I worked there as an Embedded Systems and Control Engineer and later became team lead for 2022-2023.

I'm currently looking for PhD positions for Fall 2026. My research statement, covering my prior research and future interests, can be found here. |

|

Research Interests

My research goal is to develop physics‑aware learning and control frameworks that enable robots to acquire scalable, contact‑rich skills and execute them robustly in the real world. I am particularly interested in learning‑based control (agile locomotion, loco‑manipulation, and human motion tracking), Real2Sim2Real (system identification, dynamics‑aware sim‑to‑real adaptation, active exploration, and reality‑gap‑aware benchmarks), and multi‑robot coordination spanning algorithms and training pipelines. |

|

|

Sampling-Based System Identification with Active Exploration for Legged Robot Sim2Real Learning

CoRL 2025 (Oral) (All Strong Accepts) Nikhil Sobanbabu, Guanqi He, Tairan He, Yuxiang Yang, Guanya Shi |

|

|

Preferenced Oracle Guided Multi-mode Policies for Dynamic Bipedal Loco-Manipulation

IROS 2025 Prasanth Ravichandar, Lokesh Rajan, Nikhil Sobanbabu, Quan Nguyen |

|

|

Towards Unstructured MAPF: Multi-Quadruped MAPF Demo

ICAPS 2025 (Demo Track) Rishi Veerapaneni*, Nikhil Sobanbabu*, Guanya Shi, Jiaoyang Li, Maxim Likhachev |

|

|

ASAP: Aligning Simulation and Real-World Physics for Learning Agile Humanoid Whole-Body Skills

RSS 2025 Tairan He, Jiawei Gao, Wenli Xiao, Yuanhang Zhang, Zi Wang, Jiashun Wang, Zhengyi Luo, Guanqi He, Nikhil Sobanbabu, Chaoyi Pan, Zeji Yi, Guannan Qu, Kris Kitani, Jessica Hodgins, Linxi "Jim" Fan, Yuke Zhu, Changliu Liu, Guanya Shi |

|

|

HDMI: Learning Interactive Humanoid Whole-Body Control from Human Videos

Haoyang Weng, Yitang Li, Nikhil Sobanbabu, Zihan Wang, Zhengyi Luo, Tairan He, Deva Ramanan, Guanya Shi |

|

|

OGMP: Oracle Guided Multimodal Policies for Agile and Versatile Robot Control

Lokesh Rajan, Nikhil Sobanbabu, Quan Nguyen |

|

|

Augmenting Learned Centroidal Controller with Adaptive Force Control

OCRL Project (Spring 2025) Nikhil Sobanbabu, Kailash Jagadeesh, Tony Tao, Bharath Sateeshkumar

Improving the payload adaptability of the CaJun controller by augmenting it with ℒ1 adaptive control. |

|

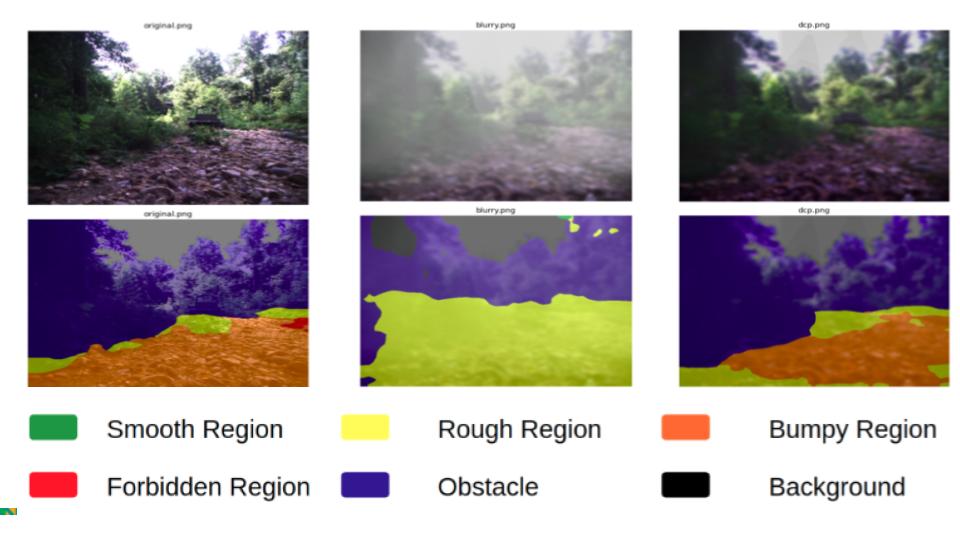

Terrain Traversability Analysis with Image Dehazing

Deep Learning Project (Fall 2024) Nikhil Sobanbabu, Woojin Kim, Ritarka Samanta

Investigation of different image dehazing techniques to improve terrain traversability analysis for autonomous vehicles. |

|

|

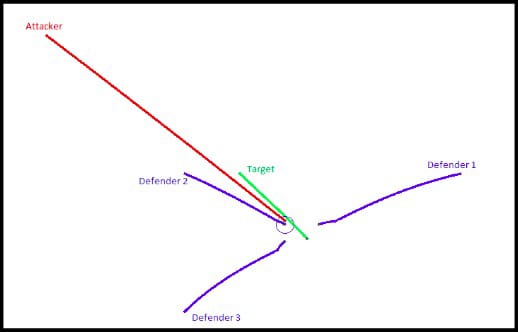

Single-Pursuer Multiple-Evader Target-Guarding-Game

Optimal strategies for a 1vN game with a reach-avoid objective, found by solving the Hamilton-Jacobi-Isaacs (HJI) PDE. |

|

|

Multi-agent trajectory tracking for Crazyflie Quadrotors

Coordinated autonomous control of multi-agent quadrotors in a regular polygon formation with varied orientations using the built-in Mellinger controller. |

|

|

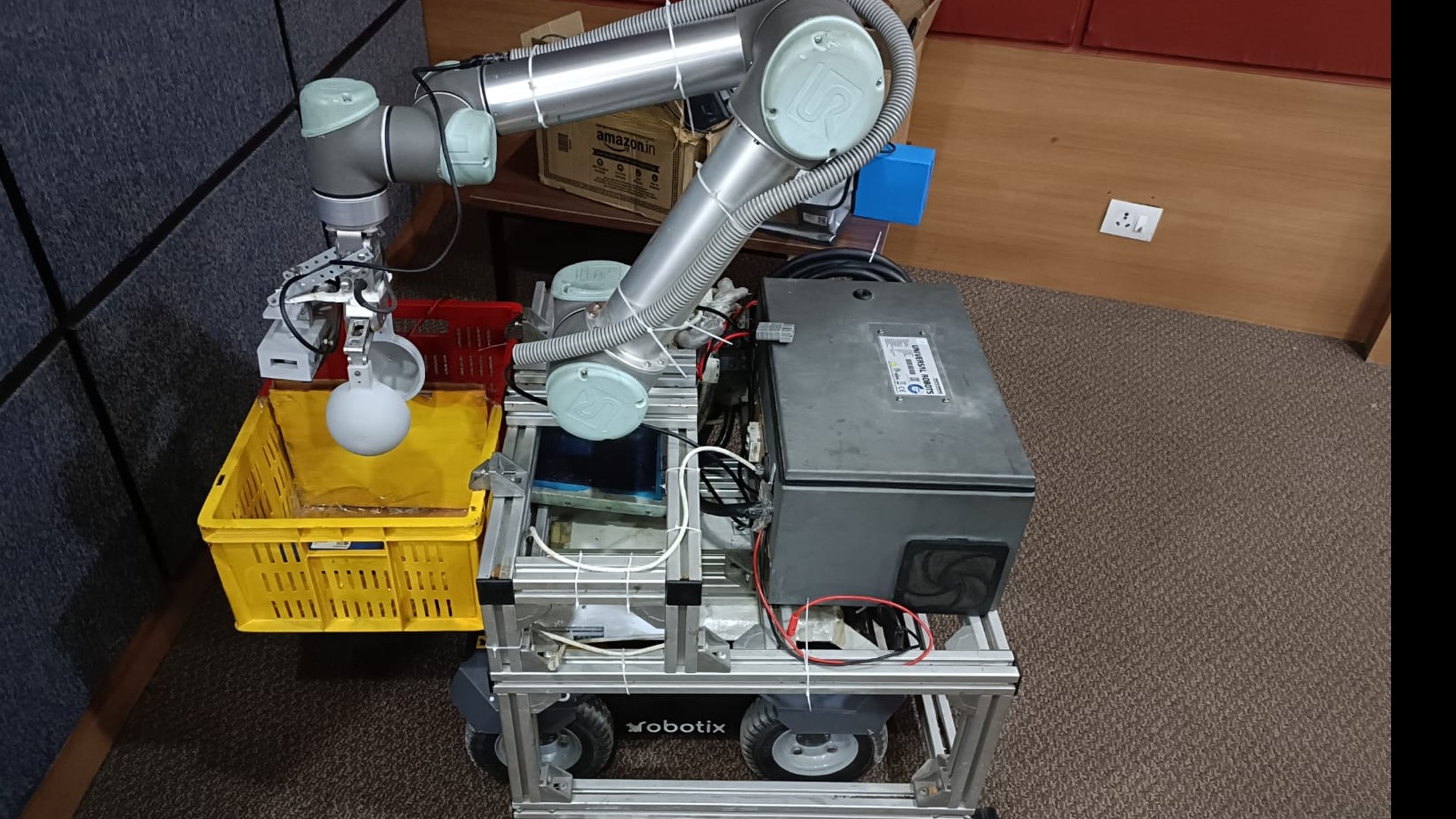

Control of 5DoF Manipulator of a Mars Rover as part of Team Anveshak

Nikhil Sobanbabu, Shivendra Verma

Autonomous control of the manipulator using inverse kinematics and ROS MoveIt. |

|

E-yantra Robotics Competition. Theme: Krishi-bot

Nikhil Sobanbabu, Gokul MK

Autonomous navigation of an agricultural robot to identify, pick, and place bell peppers inside a greenhouse. |

|

|

Receding-Horizon mode planner for mode planning against perturbations

Nikhil Sobanbabu, Lokesh Krishna

The planner chooses behaviours encoded as latent modes using Monte Carlo rollouts to be robust against perturbations, generating emergent transitions between modes/behaviours. |

|

Multi-agent Game Theoretic Framework for Target-Attacker-Defender game

Nikhil Sobanbabu, Shivendra Verma

Simulation environment for the single-attacker, single-target, multiple-defender pursuit-evasion differential game. |

|

|

Swing-up and Stabilisation of inverted pendulum.

Course EE6415 Non-Linear System Analysis

Swing-up is done using a control law derived from an energy-based Lyapunov function. After the pendulum reaches an appropriate angle, pole-placement-based stabilisation kicks in. |

|

|

Motion planning for a KUKA mobile Manipulator

Course ED5215 Intro to Motion Planning Nikhil Sobanbabu, Balaji R, Kanishkan M S

Problem Statement: Optimal pick and place of multiple objects to a given destination with payload constraints for the mobile manipulator.

|

Fun Mini-Project |

|

|

Why WASD when you can play Minecraft with an ESP32 |

Miscellaneous |

|

|

Tech and Innovation Fair, coordinator 2022.

Flagship event of Shaastra, IITM's Technical festival for the year 2022. Participants convert a prototype to a minimum viable product. |

|

|

| Discussion with Bob Balaram (Chief Engineer of the Ingenuity helicopter) | Former Indian Defence Secretary, Ajay Kumar |

|

|

Template from jonbarron. |